Private Local LlamaOCR with a User-Friendly Streamlit Front-End

- Dwain Barnes

- Nov 20, 2024

- 3 min read

Updated: Nov 21, 2024

Optical Character Recognition (OCR) is a powerful tool for extracting text from images, and with the rise of multimodal AI models, it's now easier than ever to implement locally. In this guide, we'll show you how to build a professional OCR application using Llama 3.2-Vision, Ollama for the backend, and Streamlit for the front end.

Prerequisites

Before we start, ensure you have the following:

Python 3.10 or higher installed.

Anaconda (Optional)

Ollama installed for local model hosting. Download Ollama here.

Basic Python knowledge to set up the project.

Streamlit installed for the user-friendly front end.

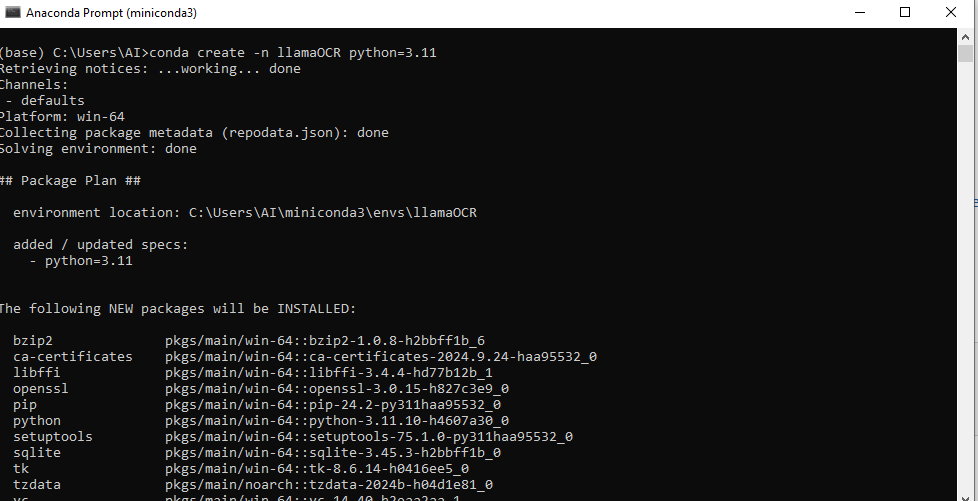

Create an anaconda enviroment (Optional)

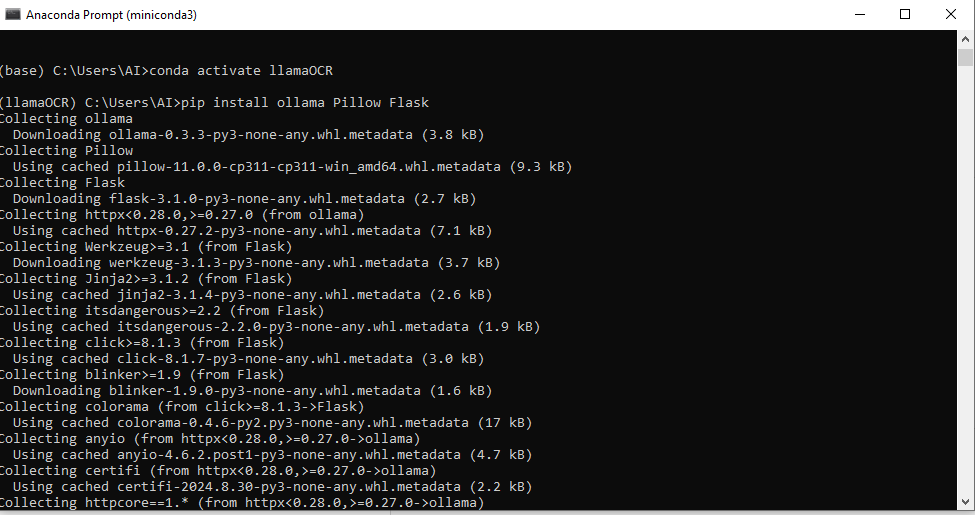

activate the enviroment (optional)

To install required libraries, run:

pip install ollama streamlit PillowStep 1: Install and Set Up Ollama

Ollama allows you to run LLM models like Llama 3.2-Vision locally.

Install Ollama following instructions from my blog post here

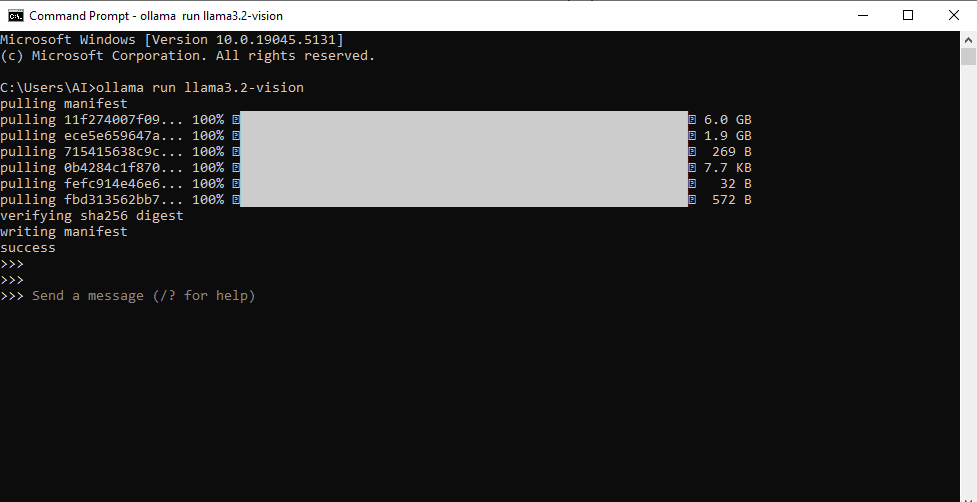

Load the Llama 3.2-Vision model by running:

ollama pull llama3.2-vision

Once installed, Ollama will host the model on your local machine, ready to process images.

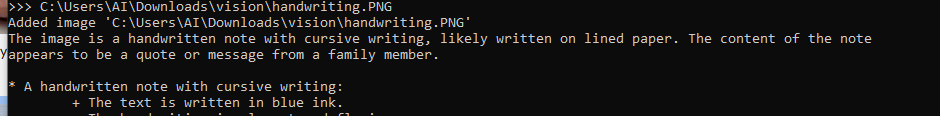

let's test it by draging an image to the prompt.

Step 2: Build the Backend with Streamlit

Streamlit makes it easy to build interactive, aesthetically pleasing web applications. The following Python script handles image uploads, processes them using Llama 3.2-Vision, the Ollama API and displays the OCR results.

Streamlit Script: ocr_app.py

import os

import ollama

import streamlit as st

from PIL import Image

# Constants

UPLOAD_FOLDER = "uploads"

SYSTEM_PROMPT = """Act as an OCR assistant. Analyze the provided image and:

1. Recognize all visible text in the image as accurately as possible.

2. Maintain the original structure and formatting of the text.

3. If any words or phrases are unclear, indicate this with [unclear] in your transcription.

Provide only the transcription without any additional comments."""

# Ensure the upload folder exists

if not os.path.exists(UPLOAD_FOLDER):

os.makedirs(UPLOAD_FOLDER)

def perform_ocr(image_path):

"""Perform OCR on the given image using Llama 3.2-Vision through the Ollama library."""

try:

response = ollama.chat(

model='llama3.2-vision',

messages=[{

'role': 'user',

'content': SYSTEM_PROMPT,

'images': [image_path]

}]

)

return response.get('message', {}).get('content', "")

except Exception as e:

st.error(f"An error occurred during OCR processing: {e}")

return None

# Streamlit app

st.set_page_config(

page_title="LlamaOCR - Local OCR Tool",

page_icon="📄",

layout="centered"

)

st.title("📄 LlamaOCR - Local OCR Tool")

st.write("Effortlessly extract text from images using the power of **Llama 3.2-Vision**. Upload an image to get started!")

uploaded_file = st.file_uploader("Upload an Image", type=["jpg", "jpeg", "png", "gif"])

if uploaded_file:

# Save uploaded file

file_path = os.path.join(UPLOAD_FOLDER, uploaded_file.name)

with open(file_path, "wb") as f:

f.write(uploaded_file.getbuffer())

# Display uploaded image

st.subheader("Uploaded Image:")

image = Image.open(file_path)

st.image(image, caption="Your Uploaded Image", use_column_width=True)

# Perform OCR

with st.spinner("Performing OCR..."):

ocr_result = perform_ocr(file_path)

if ocr_result:

st.subheader("OCR Result:")

st.text_area("Extracted Text", ocr_result, height=200)

else:

st.error("OCR failed. Please try again.")

else:

st.info("Please upload an image to begin.")

# Add footer

st.markdown(

"""

---

**LlamaOCR** | Built with ❤️ using [Streamlit](https://streamlit.io) and **Llama 3.2-Vision**.

"""

)

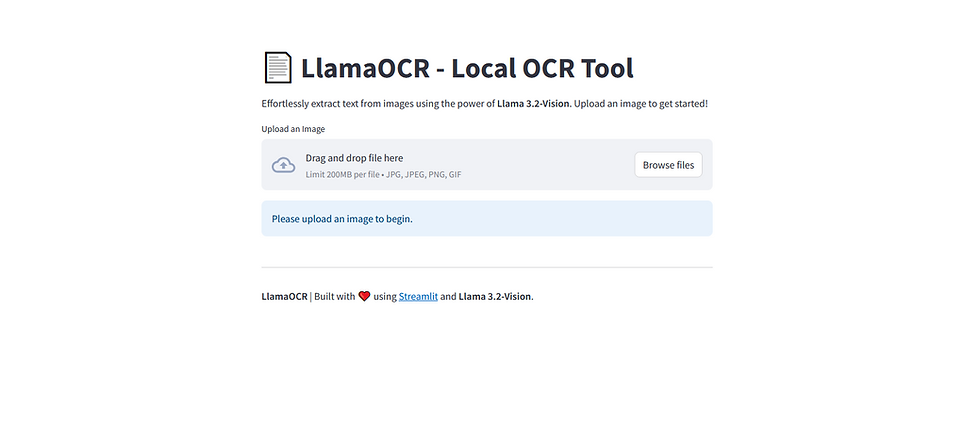

Features of the App

User-Friendly Interface:

Drag-and-drop image upload functionality.

Displays the uploaded image for review.

Extracted text is presented in an editable text area.

Professional Layout:

A clean and modern design using Streamlit.

Simple and intuitive workflow for users.

Error Handling:

Graceful handling of missing files or OCR issues with error messages.

Real-Time Results:

Uses Llama 3.2-Vision locally, providing fast processing without relying on external APIs.

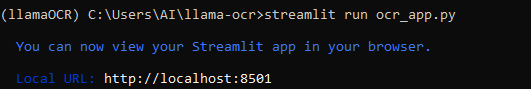

Step 3: Run the App

To run the Streamlit app:

Save the script as ocr_app.py.

Run the following command in your terminal:

streamlit run ocr_app.py

Open the app in your browser at http://localhost:8501.

Step 4: Test Your App

Upload an image with text (e.g., a photo of a document or a screenshot).

View the extracted text in the provided text box.

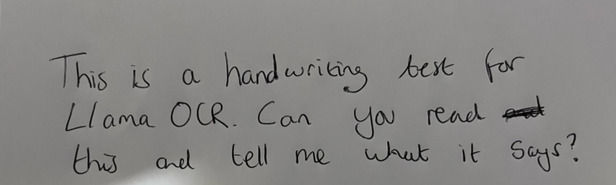

Here is the example document we will be uploading

Lets upload and test the OCR system.

Success! The program runs and works, all thought not perfect, it looks good!

Conclusion

By combining Llama 3.2-Vision for OCR processing with the intuitive user interface of Streamlit, you’ve built a professional-grade OCR application. This tool runs entirely on your local machine, ensuring privacy and security. Its easy-to-use interface makes it perfect for personal or professional use cases like digitising documents or extracting text from images.

Whether you're a developer or an end-user, LlamaOCR provides a reliable, fast, and user-friendly solution for OCR tasks. Experiment further to add features like text formatting, saving results, or multi-image processing.

Check the code out on my GitHub

Start digitising with LlamaOCR today!